Why We Need Sparse Algorithms

February 25, 2025 · 9 min readI have been working through my own C++/Python implementation of the CSparse routines from Tim Davis’s SuiteSparse package, as described in his book and YouTube lectures. In Chapter 5 of the book, he describes the following experiment:

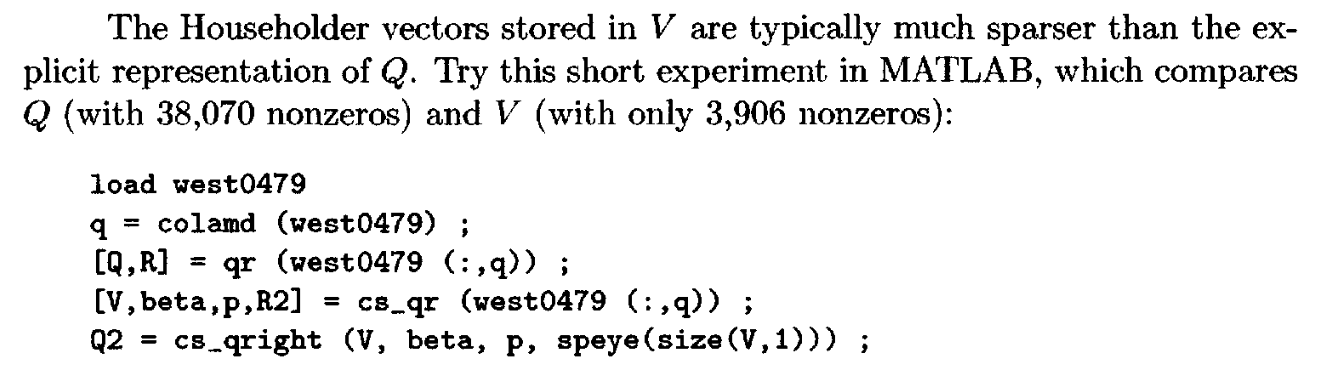

Figure 1.

The MATLAB code from the book to factor the sparse matrix west0479

using the QR decomposition.

For my own implementation, I have been writing the CSparse routines in C++, and wrapping them in Python using pybind11. I have been testing the routines against the MATLAB code that Davis provides in his book, I run the open-source Octave, but the results should be interchangeable with MATLAB. as well as the relevant SciPy packages.

When I tried to run this experiment, however, I ran into a problem. The

matrices Q and V that I generated had 114,811 and 21,791 non-zeros,

respectively, which was a far cry from the 38,070 and 3,906 non-zeros that

Davis reported (and MATLAB produced). The rest of this post explores what went

wrong and how I fixed it.

The MATLAB Results

The matrix west0479 is a 479-by-479 matrix with 1,888 non-zero entries (the

context is described in this MATLAB blog).

I’ll make a slight tweak to the code from

Figure 1

and call the matrix A instead of west0479. We can plot the sparsity pattern

using MATLAB’s spy function. Davis uses the colamd function to reorder the

columns of A using the approximate minimum degree ordering. More on that

topic in a future post, but for now, know that it is a reordering that helps

reduce the additional number of non-zeros (known as fill-in) in the factors

of the matrix. The following code will plot the original A, and its reordered form:

load west0479

A = west0479;

q = colamd(A);

Aq = A(:, q);

spy(A)

spy(Aq)

Figure 2 shows the plots side by side. We can see that the reordering has a significant impact on the structure of the matrix, but the number of non-zeros remains the same.

Figure 2.

The sparsity pattern of the original matrix A and the reordered

matrix A(:, q) using the COLAMD algorithm.

We can also take the QR decomposition of each of the matrices to see the

effects of the reordering. Further, we’re going to use the CSparse function

cs_qr to get the matrix of Householder vectors V, their corresponding

coefficients beta, and a row permutation p. In many applications,

especially sparse ones, we don’t actually want to compute the full Q matrix,

because it is often much denser than the original matrix. Instead, we can use

the sparse V matrix and the beta coefficients to apply the Householder

vectors to, say, a right-hand side matrix B. This point is

exactly the one Davis makes in his excerpt about this experiment.

[Q, R] = qr(A);

[Qq, Rq] = qr(Aq);

[V, beta, p] = cs_qr(A);

[Vq, betaq, pq] = cs_qr(Aq);

Since V is guaranteed to be lower triangular, and R is upper triangular,

we’ll plot them on the same spy plot.

Figure 3

shows the sparsity pattern of the QR decomposition of the original matrix A,

and

Figure 4

shows the sparsity pattern of the QR decomposition of the reordered matrix A(:, q).

Figure 3.

The sparsity pattern of the QR decomposition of the original matrix A.

Figure 4.

The sparsity pattern of the QR decomposition of the reordered matrix

A(:, q).

We can see that the reordering has a significant impact on the sparsity pattern,

as well as the number of non-zeros in the Q and V matrices. We can also see

that the Q matrix is substantially denser than the V matrix in both cases.

In fact, it has an order of magnitude more non-zeros than the V matrix!

The Python Results

With Davis’s point illustrated in MATLAB, I set about implementing the same

experiment in Python. The matrix is not inherently available in SciPy, so

I wrote it to a file and read it into a sparse array. In order to keep the

experiment consistent, I also wrote the colamd permutation vector q to

a file, and read that in as well. See the Appendix for

a discussion of computing the COLAMD ordering directly in Python.

Dense QR Decomposition

The first step is to compute the QR decomposition of the original matrix A.

The scipy.sparse.linalg package does not have an implementation of the QR

decomposition (other than in the background of other functions), so I converted

A to a dense array and used the scipy.linalg.qr function. I also used this

function to get the matrix of Householder vectors V:

import numpy as np

from scipy import linalg as la, sparse

# Load the matrix A and vector q from a file

# A = sparse.coo_matrix(...) # load the west0479 matrix

# q = np.genfromtxt(...) # load the colamd permutation vector

# Permute the matrix

Aq = A[:, q].toarray()

# Compute the QR decomposition

Q, R = la.qr(Aq)

# Get the Householder vectors

(Qraw, beta), _ = la.qr(Aq, mode='raw')

V = np.tril(Qraw, -1) + np.eye(Aq.shape[0])

np.testing.assert_allclose(Q @ R, Aq, atol=1e-10)

The SciPy qr function with mode='raw' returns the raw LAPACK output from the

DGEQRF function, so we need to extract the V

matrix. The upper triangular of Qraw is identical to R.

Figure 4

shows the sparsity pattern of the QR decomposition of the reordered matrix A[:, q].

Figure 4.

The sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], computed with SciPy.

This result is not what we expected. Both the Q matrix and V + R matrices

are much denser than the MATLAB results, even though we are using exactly the

same column ordering. What happened?

The Fix

The problem is that the dense QR decomposition is not the right tool for the job. The dense QR decomposition is designed for dense matrices, not for sparse matrices. Since it operates on every element of the matrix, we get vastly more fill-in of non-zero elements where we should have exactly zero. Although it is inefficient to perform all of these additional operations, we would expect that the result of the dense oepration should still be close to the result of the sparse operation, with the fill-in values taking on exceedingly small numbers due to inexact floating point cancellations. Maybe we can just threshold the values to zero? Let’s try that:

tol = np.finfo(float).eps # ϵ = 2.220446049250313e-16

Q[np.abs(Q) < tol] = 0

V[np.abs(V) < tol] = 0

R[np.abs(R) < tol] = 0

np.testing.assert_allclose(Q @ R, Aq, atol=1e-10)

We add a test to show that we are still close to the original matrix Aq.

Figure 5

shows the sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], with a threshold applied to the values.

Figure 5.

The sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], computed with SciPy, with a threshold applied to the values.

We chose the machine epsilon as the threshold, but we could have chosen any value. The result is still not what we expected. By contrast, the MATLAB results contain extremely small values, showing that they are not thresholded in any way:

>> min(abs(Q(Q > 0)))

ans = 9.3410e-30

>> min(abs(V(V > 0)))

ans = Compressed Column Sparse (rows = 1, cols = 1, nnz = 1 [100%])

(1, 1) -> 3.3759e-17

>> min(abs(R(R > 0)))

ans = Compressed Column Sparse (rows = 1, cols = 1, nnz = 1 [100%])

(1, 1) -> 5.7903e-24

Sparse QR Decomposition in Python

Let’s try a proper sparse decomposition in python. I have implemented the

CSparse routines in C++ and created a python wrapper using pybind11. The

following code will compute the QR decomposition of the reordered matrix

Aq:

import csparse

# ... Load A and q as above ...

Ac = csparse.from_ndarray(Aq) # create a csparse.CSCMatrix

S = csparse.sqr(Ac) # compute the symbolic analysis

res = csparse.qr(Ac, S) # compute the QR decomposition

# Extract the results and put them into a more useable form

V, beta, R, p_inv = res.V, res.beta, res.R, res.p_inv

V = V.toarray()

beta = np.r_[beta]

R = R.toarray()

p = csparse.inv_permute(p_inv)

# Apply the Householder vectors to the identity matrix to get the full Q

Q = csparse.apply_qright(V, beta, p)

np.testing.assert_allclose(Q @ R, Aq, atol=1e-10)

We include a test at the end to ensure that our decomposition actually worked

and reproduces the original permuted Aq.

Figure 6

shows the sparsity pattern of the QR decomposition of the reordered matrix A[:, q].

Figure 6.

The sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], as computed with csparse.

This result is much closer to the MATLAB result (and even has fewer non-zeros)!

The MATLAB code for the QR function is not open-source, so we cannot get exactly the same results.

Conclusions

It is clear from this experiment that sparse routines are critical to producing the expected results on sparse matrices. Although the mathematical theory of decomposing a matrix is the same, we further need to consider the numerical approximations involved in when using a discrete computer. For a deeper discussion of numerical analysis, especially as applied to the Householder version of the QR decomposition, see Trefethen and Bau and Golub and Van Loan. For a more in-depth discussion of the CSparse routines, see Davis.

References

- Davis, Timothy A. (2006). "Direct Methods for Sparse Linear Systems".

- Treftethen, Lloyd and David Bau (1997). "Numerical Linear Algebra".

- Golub, Gene H. and Charles F. Van Loan (1996). "Matrix Computations".

Appendix: Computing COLAMD in Python

There is no way (that I could find) to directly compute a COLAMD ordering in

Python. The closest approach is to use the SuperLU package via

SciPy to compute the LU decomposition as well as the COLAMD ordering. The

following code will compute the COLAMD ordering of a sparse matrix A:

from scipy.sparse import linalg as spla

# ... load A as above ...

# Compute the LU decomposition with COLAMD ordering

lu = spla.splu(A, permc_spec='COLAMD')

q = lu.perm_c # extract the actual permutation vector

Aq = A[:, q] # permute the columns of A

# ... compute QR decompositions as above ...

Figure A.1

shows the sparsity pattern of the original A and this reordered matrix.

Nothing seems particularly out of the ordinary, but it is definitely not the

same ordering as we get from MATLAB (shown in

Figure 2

).

Figure A.1.

The sparsity pattern of the reordered matrix

A[:, q], where q is computed from SuperLU via

scipy.sparse.linalg.splu.

Figure A.2

and

Figure A.3

show the sparsity pattern of the QR decomposition of this reordered matrix

A[:, q] as computed with SciPy and csparse. It is certainly not the nice

sparse pattern that we see from the MATLAB results or my own CSparse

implementation.

Figure A.2.

The sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], where q is computed from SuperLU via

scipy.sparse.linalg.splu, and QR is computed with scipy.linalg.qr.

Figure A.3.

The sparsity pattern of the QR decomposition of the reordered matrix

A[:, q], where q is computed from SuperLU via

scipy.sparse.linalg.splu, and QR is compute with csparse.

I am not quite sure what to make of these results without diving further into the SuperLU algorithm. Presumably, the COLAMD permutation used is optimized for LU decomposition, which gives a different ordering than the one used for QR decomposition. I will investigate this issue further in the future.